CS 470: Monitoring Bird Biodiversity

"Monitoring Bird Biodiversity" is a project that uses Artificial Intelligence (AI) to teach a computer to recognize bird calls in sound files. AI is a rapidly expanding field, and ongoing research keeps broadening what it can be applied to. For bird biodiversity, the goal of our AI is to scan thousands of hours of sound recordings, pick out the bird calls, and categorize them by type of bird. This is a critical component of scientific studies that need to know how bird populations are distributed in the wild. Our project completes the first part: recognizing most bird calls in a given input sound file.

- Begin with a 60 second sound clip (in .wav format).

- -We're operating off a database of thousands of 60 second .wav files already provided to us.

- Crop the X-axis to the area of the ROI

- -We only require the small window where the bird call takes place

- Convert it into a Spectrogram.

- -Spectrograms are representations of an audio file in a visual way.

- Pad the ROIs.

- -When training our image-recognition software (YOLO - You Only Look Once), it is important for it to be trained with some background noise.

- -This is because there could be various background noise cluttering the real audio and we want the algorithm to only find bird calls.

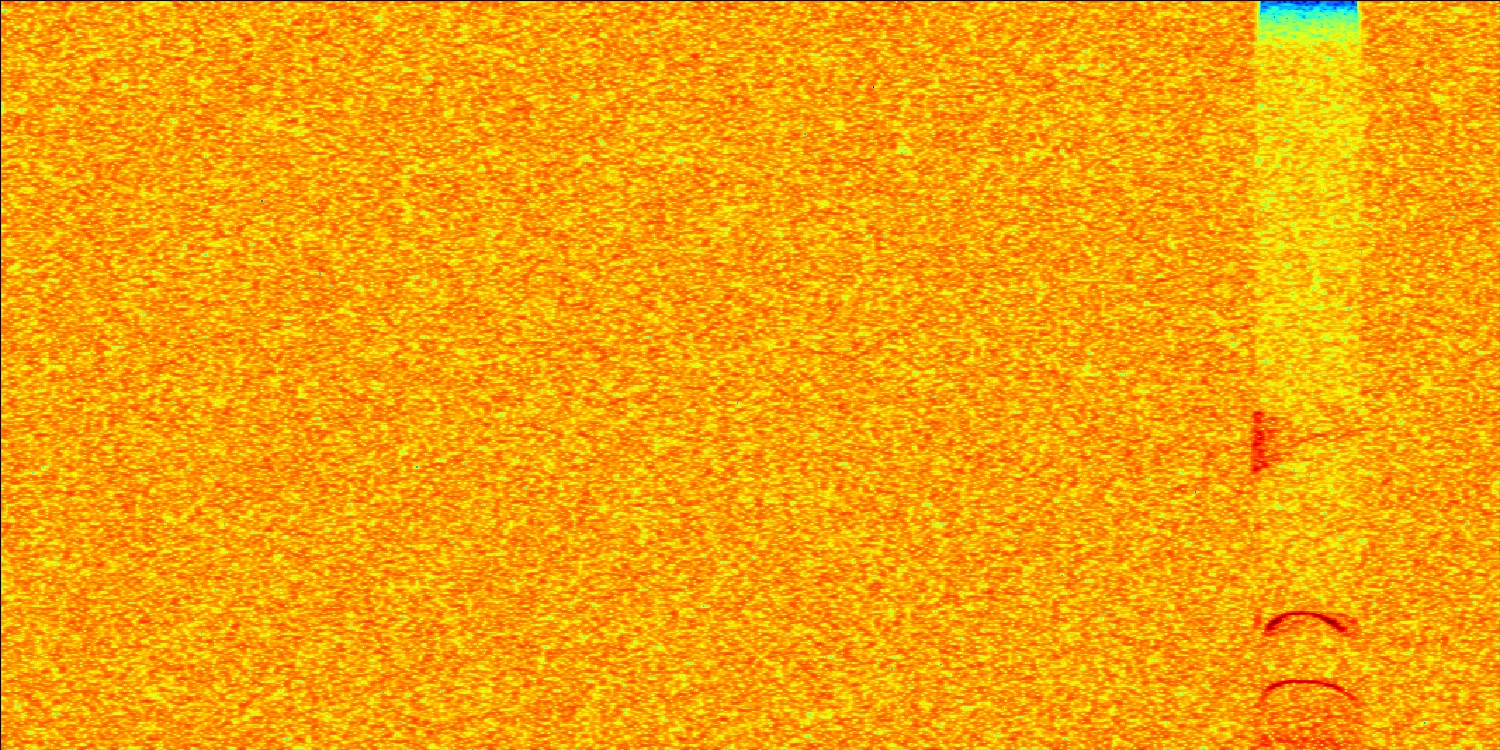

- -See Fig 3 for an example of a padded ROI.

- Map out the Regions of Interest (ROIs).

- -ROIs are what we'll be using to tell yolo what to look at.

- -This is done using a text file with the same name as the image containing the information about the bounding box surrounding the ROI.

- -Each ROI is a Bird Call confirmed by the group leading the research who have noted there is a bird there, and what type of bird it is.

- Feed them into YOLO.

- -You only look once (YOLO) is a state-of-the-art, real-time object detection system.

- -What this means is that it can detect special "ROIs" from a given input image, encapsulating the important part of the image we desire to recognize in a convenient box.

- -For our algorithm, we don't necessarily need the image as much as we need the data it returns: how many bird calls were in our input audio file?

- -YOLO is not some magic system either; it requires data. Lots and lots of data. Which is why we did all those special steps above. After collecting and carefully padding our ROIs, we can now begin to feed all that data into YOLO, teaching the system that it's now meant to recognize these bird calls.

- -While we do not need it to operate in real time currently, there is potential for the algorithm to move that way in the future.

Now, let's take a step by step approach into our algorithm:

Fig 1: Padded Region-of-Interest Example.
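To make the padding and labeling steps more concrete, here is a minimal Python sketch of how a padded crop window and the matching YOLO label line could be computed. It is not our actual project code. The `Roi` fields, the helper names (`padded_window`, `yolo_label`, `label_path`), the one-second padding, the 8000 Hz frequency ceiling, and the single class id `0` are all assumptions made for illustration. The label layout (`class x_center y_center width height`, each normalized to the range 0 to 1) is the standard YOLO text-label format.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass
class Roi:
    """One confirmed bird call: a time span (seconds) and a frequency band (Hz)."""
    t_start: float
    t_end: float
    f_low: float
    f_high: float


def padded_window(roi, pad_s, clip_len_s=60.0):
    """Time window to crop: the ROI plus pad_s seconds of background on each
    side, clamped to the boundaries of the clip."""
    return max(0.0, roi.t_start - pad_s), min(clip_len_s, roi.t_end + pad_s)


def yolo_label(roi, window, f_max, class_id=0):
    """Return one YOLO label line for the ROI inside the cropped spectrogram.

    Assumes the image spans `window` on the x-axis and 0..f_max Hz on the
    y-axis, with the highest frequency at the top row of the image.
    """
    w_start, w_end = window
    width_s = w_end - w_start
    x_center = ((roi.t_start + roi.t_end) / 2 - w_start) / width_s
    box_w = (roi.t_end - roi.t_start) / width_s
    # Image y grows downward, so flip the frequency axis.
    y_center = 1.0 - ((roi.f_low + roi.f_high) / 2) / f_max
    box_h = (roi.f_high - roi.f_low) / f_max
    return f"{class_id} {x_center:.6f} {y_center:.6f} {box_w:.6f} {box_h:.6f}"


def label_path(image_path):
    """Label file with the same name as the image, but a .txt extension."""
    return Path(image_path).with_suffix(".txt")


# Hypothetical ROI: a call from 12 s to 14 s between 2 kHz and 6 kHz.
roi = Roi(t_start=12.0, t_end=14.0, f_low=2000.0, f_high=6000.0)
window = padded_window(roi, pad_s=1.0)
label = yolo_label(roi, window, f_max=8000.0)
print(window)                      # (11.0, 15.0)
print(label)                       # 0 0.500000 0.500000 0.500000 0.500000
print(label_path("clip_001.png"))  # clip_001.txt
```

In this sketch the padding widens only the time axis, so the box ends up narrower than the image and YOLO sees background on both sides of the call. Writing `label` to the path returned by `label_path` would give the same-named text file described above.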

Qualitative Results

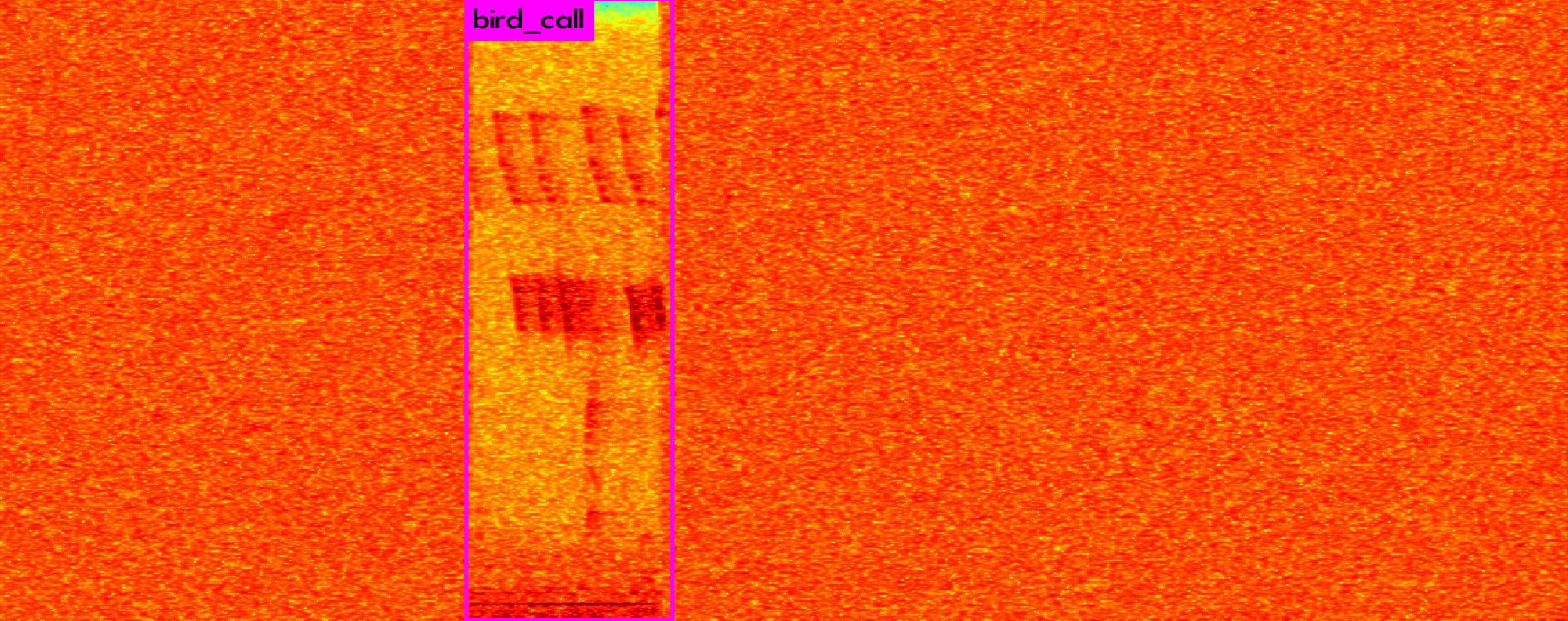

Examples of bird calls recognized successfully (images we have confirmed to contain at least one bird call):


Examples of bird calls recognized incorrectly (images we have confirmed to contain no bird calls):


Quantitative Results

Fig 2: Confusion Matrix

Fig 3: ROC/RPC Curves

- Avg. Accuracy: 0.72668015

- Avg. F1-Score: 0.7568
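For readers unfamiliar with these metrics, the sketch below shows how accuracy and F1-score are derived from the four cells of a binary confusion matrix (bird call present or absent). The counts in the example are made-up placeholders, not our results, and the function names are our own for illustration. The sketch also does not show how the averages reported above were taken.

```python
def accuracy(tp, fp, fn, tn):
    """Fraction of all decisions that were correct."""
    total = tp + fp + fn + tn
    return (tp + tn) / total if total else 0.0


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0.0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Placeholder counts: true positives, false positives,
# false negatives, true negatives.
tp, fp, fn, tn = 40, 10, 10, 40
print(f"accuracy = {accuracy(tp, fp, fn, tn):.2f}")  # accuracy = 0.80
print(f"f1       = {f1_score(tp, fp, fn):.2f}")      # f1       = 0.80
```

F1 ignores true negatives, so it can differ noticeably from accuracy when most images contain no bird call.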

Conclusion

We believe our algorithm is fairly successful. If we were able to take it further, we would like to pursue several extensions: identifying which bird made each call, training on a much larger dataset, and possibly adding a real-time element. Although the task was difficult to understand at first, we quickly came to grips with it, and the work went fairly smoothly. We did need to redo some of our ROI-padding code, but that was a quick and easy fix.

For more information about YOLO, click here. Special thanks to Matthew Clark and Shree Baligar for guiding us and for giving us such a great project to work on.