CS 385 : URL-Recognition/Detecting URLs within an image.

Introduction

Goal of URL-Rocognition project is to take the URL of an image and extract the URL as text.

The goal of the project was to be able to extract a URL from an image, while ignoring any other text in the image. This would make it easier to take a link out from some image and send to people or to extract information from a screen shot. The general strategy used to obtain the results we were looking for was to first classify whether a subsection of an image has a URL, using a bag of words features model. Then after determining the subsections, we pre_process the image further and ran optical character recognition to obtain text from the section of interest. Finally we ran another bag of words on the input text, using Naive Bayes model in order to classify whether the text is a URL or not. A big component of the project was to collect images and data for training our models. We were able to automate data collection using the python library Selenium in order collect over 1000 screenshots.

Project Components

- Data Collection

- URL Detector

- Preprocessing

- Optical Character Recognition

- Text Classifier

Data Collection

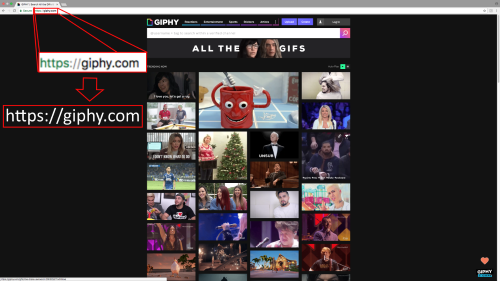

Collecting screenshots by our web crawler.

For data collection, we used the python libraries Selenium and PyAutoGui to visit different webpages and take screenhots. Selenium worked as a driver that could navigate to a given link. We had a collection of 1050 links that were read in the python script for the webdriver to navigate to. This list consisted of 50 links to the most popular webpages as well as 15 different links from each of the originals. Once a webpage had fully loaded, the library PyAutoGui was used to save a screenshot of that page. Using this method, we were able to collect a total of 1050 images for use as training and testing images. All images were collected using the Google Chrome Browser.

URL Detector

The image is preprocessed and sliced into 10 horizontal sections.

This module starts with a matlab script that takes a full sized screenshot, presumably of a webpage and divides it into 10 horizontal slices. These slices were designed to be fed into the next matlab script which trained a bag-of-features model. This bag-of-features model then determines whether or not the given slice is likely to contain a URL. The purpose of partitioning the original screenshot was to increase accuracy. We found that later modules had trouble identifying text in large images. Performing a sliding window, would help reduce the problem size. By only further processing the subsections that are likely to contain URLS acurracy can be improved. This is the first part of a two filter method. This first filter is designed to narrow down our input image so the second filter can quickly and accurately determine the output.

Preprocessing

Image slices determined to contain URL(s) are preprocessed for the OCR.

The Preprocessing receives and image and prepares it for text extraction. The Python script uses OpenCV to read the image and apply the necessary operations. To generalize the input we grayscale the image. Originally, the script used Otsu's method for thresholding but this provided more noise. Upon further investigation we found that resizing the image and applying Gaussian Adaptive Thresholding improved the OCR accuracy.

Optical Character Recognition

The optical character recognition (OCR) component of our project leverages the power of Google's Tesseract OCR, in order to obtain text from a provided image. We used a python wrapper called pytesseract which allowed us to call tesseract functionality within our python scripts directly. Using the image results from our preprocessing step, we were able to improve the OCR accuracy and obtain a list of strings which we then pass to our text classifier.

Text Classifier

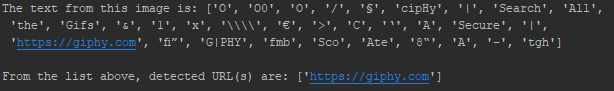

When running our python code on the first slice from our example image, these are the results.

The final step of our URL recognition process involves filtering out non-URL text in order to get just the URL text from an image. The text classifier uses a bag of words model in order to try and correctly classify whether a given text is a URL or not. The classifier treats each character within a string as a 'word', and also considers the length of the string and presence of substrings like 'https', 'www', '.com' etc. Before classification is performed, the text classifier is 'trained' by passing it a long list of input labeled as URL and non-URL strings. From each string, a histogram is created and using the Naive Bayes classifier method, probabilities are calculated which determine whether a text is likely a URL or not. Using a small dataset of training text which we provided via two text files (one with only URLS and the other with non-URL text), we were able to get a fair amount of accuracy in URL text classification.

Example data from URL file:

https://repl.it/@carlosd_/OCRURL

http://www.nltk.org/book/ch06.html

https://stackoverflow.com/questions/

http://www.asciitable.com/

jakevdp.github.io/blog/2013/08/07/conways-game-of-life/

https://stackoverflow.com/1547940#1547940

https://trello.com/b/3jAyVW5A/url-recognition

https://en.wikipedia.org/wiki/Receiver_operating_characteristic

Example data from non-URL file:

A percent-encoded.

octet is encoded

as a character triplet

consisting of the

percent character.

followed by the two

representing that

octet's numeric value.

character serves as the indicator

only appear if it is followed

by two hex digits.Before

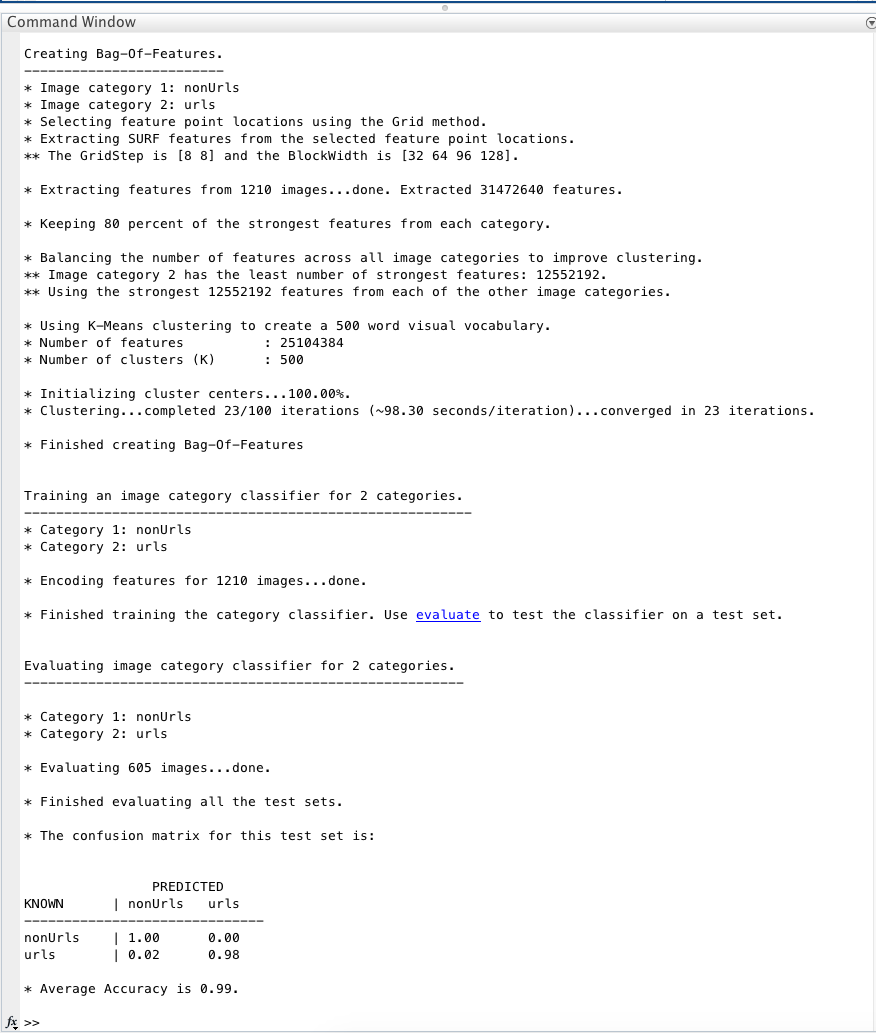

Conclusions

According to the confusion matrix in the image to the left, on our testing data, we were able to get 99% accuracy on True Negatives and 98% accuracy on true positives. It is tough to say whether this is truely due to the extracted features of the text, or from the landmarks in the web browser. Since the model was trained on the same section of our web browser, it is likely that some of the extracted features were things like the back and forward buttons, Search bar and window controls.

The scope of this project was smaller than initially intended. However the core functionallity is still all there. This project is in a position where it can be easily expanded. The next step for the project is to expand the complexity of the input. As it stands, our URL-Detector will only find URLs in a very specific input. This is the main flaw in the system. This can be easily expanded by collecting more screenshots of different browsers, different size screenshots, and of different blocks of text. The module that uses OCR to parse the image text is very accurate. We are most proud of how we were able to sharpen the text of an image to improve OCR performace.