CS 385: Scene Classification using Spatial Pyramid and Random Decision Forest

Sample dataset for scene classification

Introduction

In this project we set out to find the "best" classifier for scene classification. We implemented a spatial pyramid to classify scenes and to improve on the performance of a plain bag-of-words representation. A spatial pyramid divides the image into increasingly smaller sub-regions and computes a histogram of the local features found inside each sub-region. It is more discriminative than a bag-of-words representation because it records not only which features appear in the image but also roughly where they appear. We also implemented a random decision forest classifier. We compared the spatial pyramid and random decision forest results against the starter code's histogram intersection classifier to determine which performed best.
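The pyramid described above can be sketched as follows. This is a minimal illustration, not the project's starter code: it assumes each local feature has already been quantized to a visual-word index and is passed as a hypothetical `(x, y, word)` tuple, and that level `l` uses a `2**l` x `2**l` grid, with level 0 being the whole image. Lazebnik et al. also weight each level before matching; this sketch leaves the levels unweighted.

```python
from typing import List, Tuple

# Hypothetical feature format: (x, y, visual_word), where x and y are pixel
# coordinates and visual_word is the index of the nearest codebook entry.
Feature = Tuple[float, float, int]


def grid_histogram(features: List[Feature], width: int, height: int,
                   vocab_size: int, cells_per_side: int) -> List[int]:
    """Count visual words in each cell of a cells_per_side x cells_per_side grid.

    The result is the histogram of cell 0, then cell 1, and so on, with
    cells numbered row by row.
    """
    hist = [0] * (cells_per_side * cells_per_side * vocab_size)
    for x, y, word in features:
        # Map the point to a grid cell; clamp points lying on the far edge.
        col = min(int(x * cells_per_side / width), cells_per_side - 1)
        row = min(int(y * cells_per_side / height), cells_per_side - 1)
        cell = row * cells_per_side + col
        hist[cell * vocab_size + word] += 1
    return hist


def spatial_pyramid(features: List[Feature], width: int, height: int,
                    vocab_size: int, top_level: int) -> List[int]:
    """Concatenate the grid histograms of levels 0..top_level.

    Level l uses a 2**l x 2**l grid, so level 0 is the plain bag of words.
    Levels are left unweighted in this sketch.
    """
    pyramid: List[int] = []
    for level in range(top_level + 1):
        pyramid.extend(grid_histogram(features, width, height,
                                      vocab_size, 2 ** level))
    return pyramid


# Usage: three features in a 100 x 100 image with a 2-word vocabulary.
example = [(10, 10, 0), (90, 90, 1), (90, 10, 1)]
print(spatial_pyramid(example, 100, 100, vocab_size=2, top_level=1))
# [1, 2, 1, 0, 0, 1, 0, 0, 0, 1]
```

The first two numbers are the whole-image word counts; the remaining eight are the counts for the four cells of the 2 x 2 grid.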

Our Approach & Algorithm

The first method we experimented with was a spatial pyramid. The spatial pyramid implementation is similar to bag of words, except that instead of counting the frequency of each feature over the entire image, we broke the image into sections and counted the frequencies within each section. This let us find not only which features are present but also where in the image they are located. Our second approach used random decision forests. Both implementations are described in more detail below.
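A toy example of why the sections matter: two hypothetical 100 x 100 images contain the same visual words, but with the "sky" and "grass" words in opposite vertical positions. The word indices and coordinates are made up for illustration. A whole-image bag-of-words count cannot tell the images apart, while counts taken per section of a 2 x 2 grid can.

```python
from collections import Counter

SKY, GRASS = 0, 1  # hypothetical visual-word indices

# Two 100 x 100 images with the same words in opposite vertical positions
# (y grows downward, as in image coordinates).
upright = [(20, 10, SKY), (80, 15, SKY), (30, 85, GRASS), (70, 90, GRASS)]
flipped = [(20, 90, SKY), (80, 85, SKY), (30, 15, GRASS), (70, 10, GRASS)]


def bag_of_words(features):
    """Word counts over the whole image; positions are ignored."""
    return Counter(word for _, _, word in features)


def section_counts(features, width=100, height=100, cells_per_side=2):
    """Word counts per grid section, keyed by (row, col, word)."""
    counts = Counter()
    for x, y, word in features:
        col = min(int(x * cells_per_side / width), cells_per_side - 1)
        row = min(int(y * cells_per_side / height), cells_per_side - 1)
        counts[(row, col, word)] += 1
    return counts


print(bag_of_words(upright) == bag_of_words(flipped))      # True
print(section_counts(upright) == section_counts(flipped))  # False
```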

Spatial Pyramid

We found some spatial pyramid starter code to help us begin the implementation. The code used a classification technique we had not originally planned on using, histogram intersection. We kept it and also added the support vector machine (SVM) classifier from our bag-of-words implementation so that we could compare the results. We got the project working with 2 classes before moving on to multi-class classification. To assign each image one of several possible classes, we used 8 SVMs, each trained to separate a single class from the rest (one-vs-rest). For each test image all 8 SVMs were run, and the predicted label was the class whose SVM reported the highest confidence.
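The two ingredients mentioned above can be sketched as follows, under stated assumptions. `histogram_intersection` is the standard bin-wise-minimum similarity; in the spatial pyramid literature it is typically used as a kernel inside an SVM, and this sketch does not reproduce how the starter code applied it. `one_vs_rest_predict` shows only the decision rule: the per-class scorers here are hypothetical stand-ins (intersection with a made-up prototype histogram), not trained SVMs, but each SVM's decision value would plug in the same way.

```python
from typing import Callable, Dict, Sequence

Histogram = Sequence[float]


def histogram_intersection(h1: Histogram, h2: Histogram) -> float:
    """Sum of bin-wise minima: larger values mean more similar histograms."""
    if len(h1) != len(h2):
        raise ValueError("histograms must have the same number of bins")
    return sum(min(a, b) for a, b in zip(h1, h2))


def one_vs_rest_predict(x: Histogram,
                        scorers: Dict[str, Callable[[Histogram], float]]) -> str:
    """Run every one-vs-rest scorer and return the label with the top score."""
    return max(scorers, key=lambda label: scorers[label](x))


# Hypothetical stand-ins for trained SVMs: score = intersection with a
# made-up prototype histogram for each class.
prototypes = {"Sky": [5, 0, 1], "Desert": [1, 4, 1], "Kitchen": [0, 1, 6]}
scorers = {label: (lambda x, p=proto: histogram_intersection(x, p))
           for label, proto in prototypes.items()}

print(histogram_intersection([1, 2, 3], [3, 2, 1]))  # 4
print(one_vs_rest_predict([4, 1, 0], scorers))       # Sky
```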

Random Decision Forest

The second method we used was a random decision forest. To classify a new input vector, we pass it down each of the trees in the forest. Each tree gives a classification, and we say the tree "votes" for that class. The forest chooses the classification with the most votes over all the trees in the forest.
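A compact sketch of the voting scheme, assuming a simplified forest in which each tree is a depth-1 decision stump trained on a bootstrap sample of the data, using a randomly chosen subset of features. Full random forests grow deeper trees and usually re-draw the feature subset at every split; the toy data and the labels `"Kitchen"` and `"Sky"` are hypothetical, and this is not the project's implementation.

```python
import random
from collections import Counter
from typing import Iterable, List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]  # (feature vector, label)


def majority(labels: Iterable[str]) -> str:
    """Most common label; ties go to the label seen first."""
    return Counter(labels).most_common(1)[0][0]


class Stump:
    """A depth-1 decision tree: one feature, one threshold, one label per side."""

    def __init__(self, feature: int, threshold: float, left: str, right: str):
        self.feature, self.threshold = feature, threshold
        self.left, self.right = left, right

    def predict(self, x: Sequence[float]) -> str:
        return self.left if x[self.feature] <= self.threshold else self.right


def fit_stump(samples: List[Sample], features: List[int]) -> Stump:
    """Choose the feature/threshold split with the fewest training errors."""
    best = None
    for f in features:
        values = sorted({x[f] for x, _ in samples})
        # Candidate thresholds: midpoints between consecutive distinct values.
        thresholds = [(a + b) / 2 for a, b in zip(values, values[1:])] or values
        for t in thresholds:
            left = [y for x, y in samples if x[f] <= t]
            right = [y for x, y in samples if x[f] > t]
            left_label = majority(left)
            right_label = majority(right) if right else left_label
            errors = (sum(y != left_label for y in left)
                      + sum(y != right_label for y in right))
            if best is None or errors < best[0]:
                best = (errors, Stump(f, t, left_label, right_label))
    return best[1]


def fit_forest(samples: List[Sample], n_trees: int = 15,
               n_features: int = 1, seed: int = 0) -> List[Stump]:
    rng = random.Random(seed)
    dim = len(samples[0][0])
    forest = []
    for _ in range(n_trees):
        # Bootstrap: draw len(samples) training examples with replacement.
        boot = [rng.choice(samples) for _ in samples]
        # Each tree only looks at a random subset of the features.
        chosen = rng.sample(range(dim), n_features)
        forest.append(fit_stump(boot, chosen))
    return forest


def predict_forest(forest: List[Stump], x: Sequence[float]) -> str:
    """Every tree votes; the forest returns the label with the most votes."""
    return majority(tree.predict(x) for tree in forest)


# Toy, hypothetical data: two features that both separate the classes.
train = ([((i, i + 1), "Kitchen") for i in range(10)]
         + [((i + 20, i + 21), "Sky") for i in range(10)])
forest = fit_forest(train)
print(predict_forest(forest, (2, 3)))    # Kitchen
print(predict_forest(forest, (25, 27)))  # Sky
```

Because every tree sees a different bootstrap sample and feature subset, the trees disagree on hard inputs, and the majority vote smooths out individual trees' mistakes.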

Confusion Matrix Explained

| Category name | Accuracy | False positives (true label) | False negatives (wrong predicted label) |
|---|---|---|---|
| Airport | 0.94 | Kitchen, Sky | Campus, Kitchen |
| Auditorium | 0.96 | Airport, Campus | Football Field, Kitchen |
| Bamboo Forest | 0.99 | Sky, Campus | Desert, Football Field |
| Campus | 0.95 | Bamboo Forest, Football Field | Desert, Bamboo Forest |
| Desert | 0.98 | Campus, Football Field | Kitchen, Campus |
| Football Field | 0.94 | Bamboo Forest, Campus | Airport, Auditorium |
| Kitchen | 0.98 | Auditorium, Campus | Airport, Campus |
| Sky | 0.99 | Airport, Football Field | Desert, Airport |
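The columns of the table above can be computed from lists of true and predicted labels. This sketch assumes the accuracy column is the fraction of each category's test images that were labeled correctly (the diagonal of the row-normalized confusion matrix); the labels in the usage lines are hypothetical. A false positive for a category is an image of another category predicted as it, reported with its true label; a false negative is an image of the category predicted as something else, reported with the wrong predicted label.

```python
from typing import Dict, Sequence


def per_category_report(true_labels: Sequence[str],
                        predicted_labels: Sequence[str],
                        categories: Sequence[str]) -> Dict[str, dict]:
    """Accuracy and error lists per category, matching the table's columns."""
    report = {c: {"correct": 0, "total": 0,
                  "false_positives": [], "false_negatives": []}
              for c in categories}
    for truth, pred in zip(true_labels, predicted_labels):
        report[truth]["total"] += 1
        if truth == pred:
            report[truth]["correct"] += 1
        else:
            # A false positive for the predicted class, listed by true label.
            report[pred]["false_positives"].append(truth)
            # A false negative for the true class, listed by wrong prediction.
            report[truth]["false_negatives"].append(pred)
    for entry in report.values():
        # Fraction of this category's test images labeled correctly.
        entry["accuracy"] = (entry["correct"] / entry["total"]
                             if entry["total"] else 0.0)
    return report


# Hypothetical labels for four test images.
truth = ["Sky", "Sky", "Desert", "Desert"]
predicted = ["Sky", "Desert", "Desert", "Desert"]
report = per_category_report(truth, predicted, ["Sky", "Desert"])
print(report["Sky"]["accuracy"], report["Sky"]["false_negatives"])        # 0.5 ['Desert']
print(report["Desert"]["accuracy"], report["Desert"]["false_positives"])  # 1.0 ['Sky']
```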

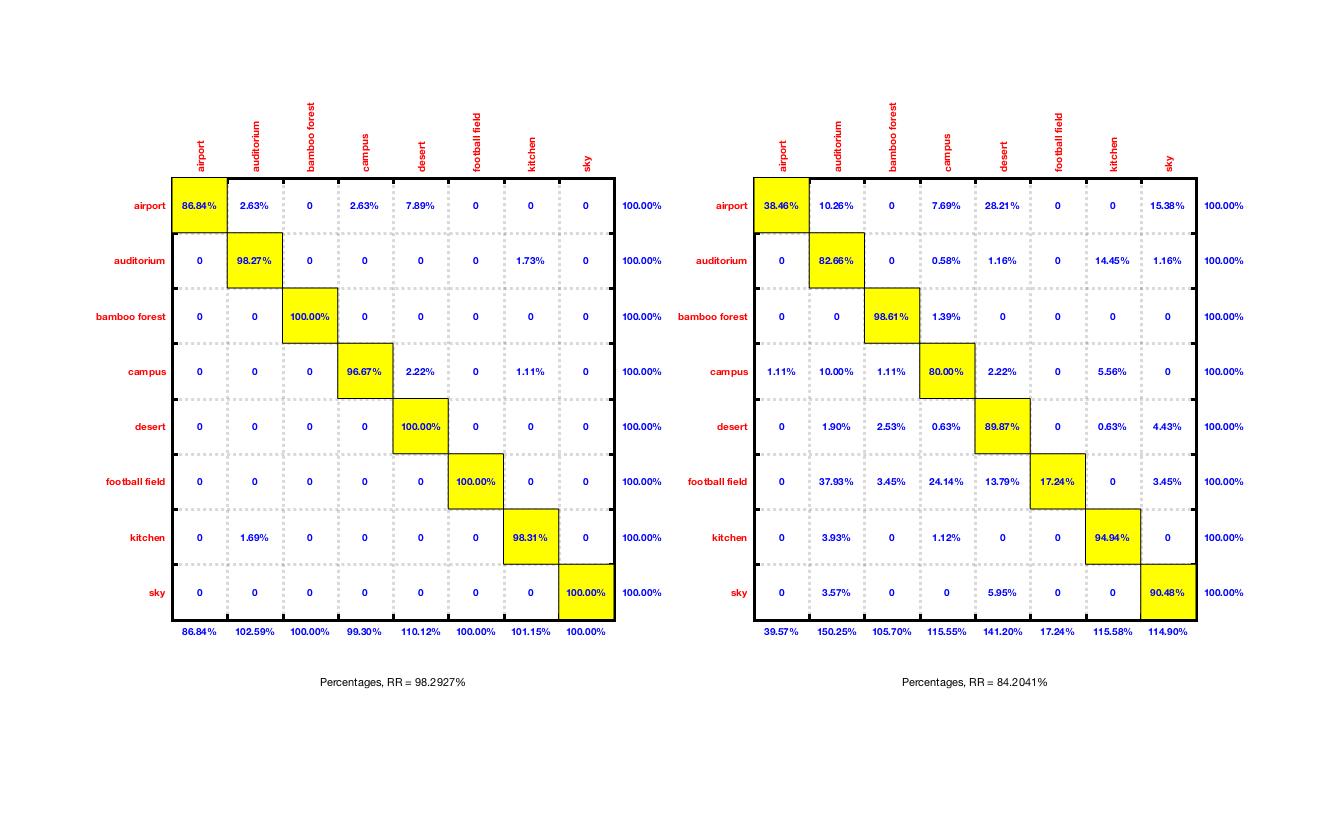

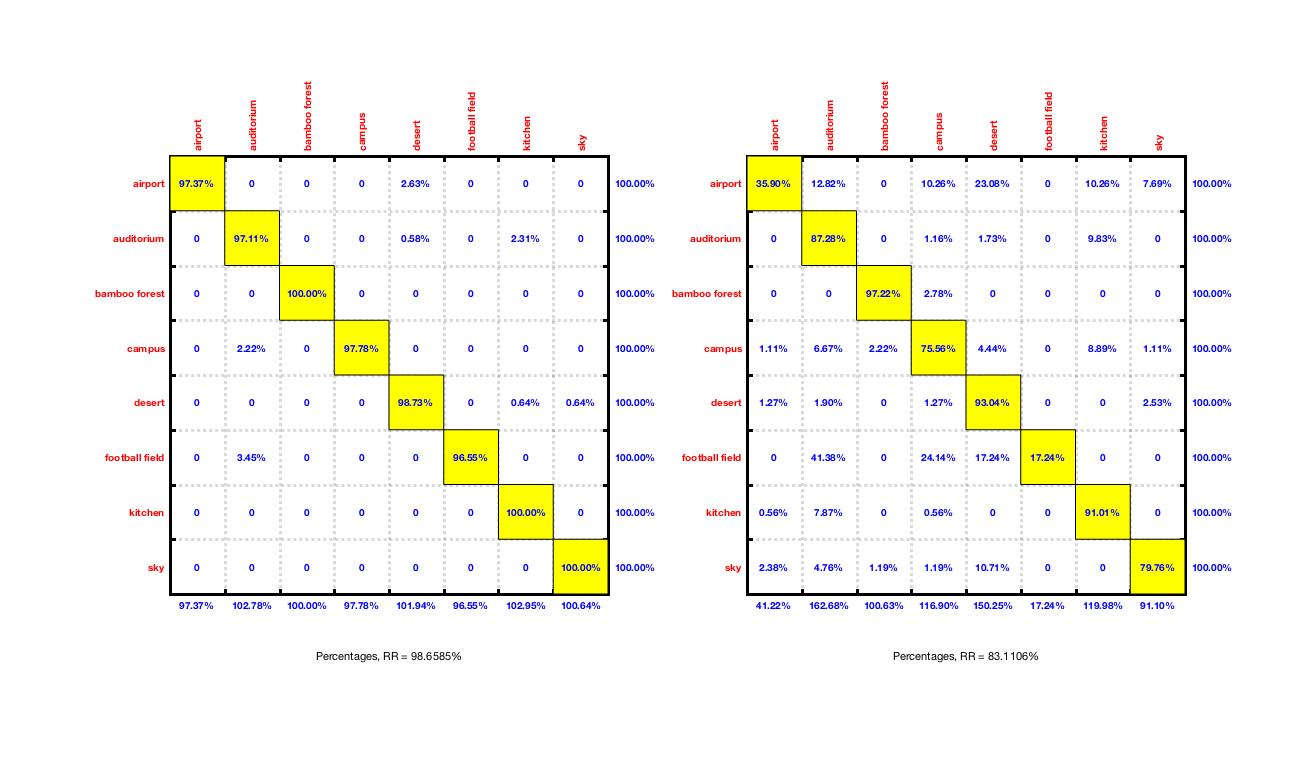

Confusion Matrix Results using Spatial Pyramid: rbf kernel

Training and testing confusion matrices for spatial pyramid level 0 (bag of words), level 1, and level 10.

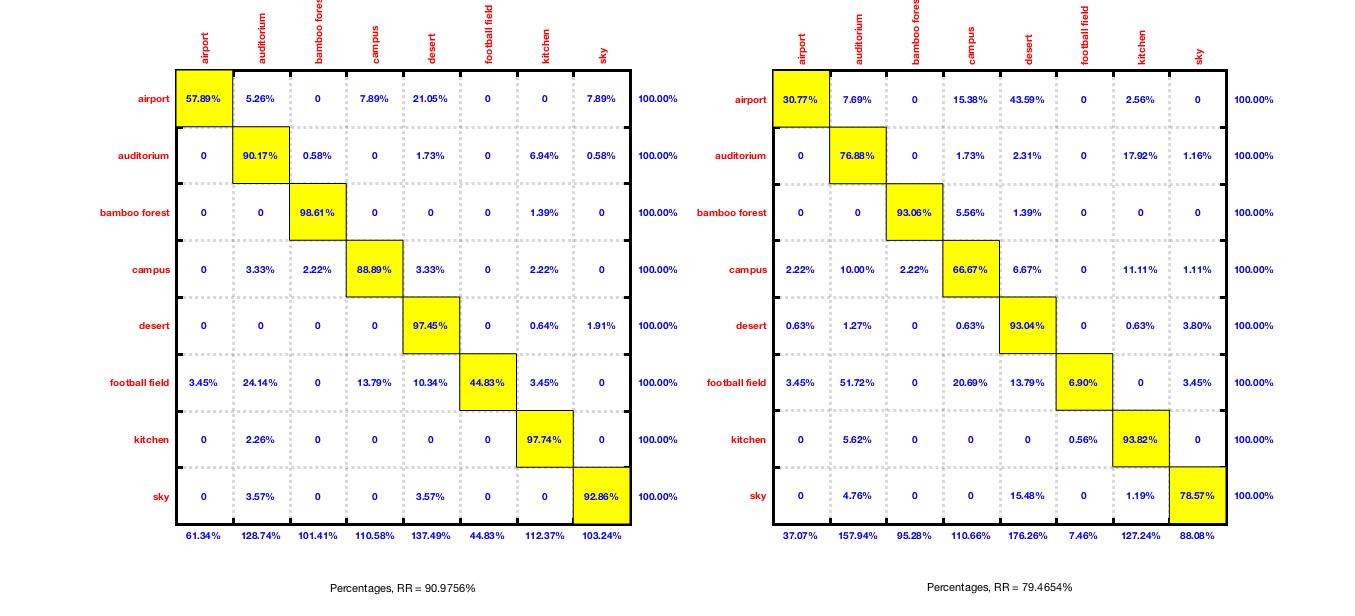

Confusion Matrix Results using Spatial Pyramid: linear kernel

Training and testing confusion matrices for spatial pyramid levels 0 and 5.

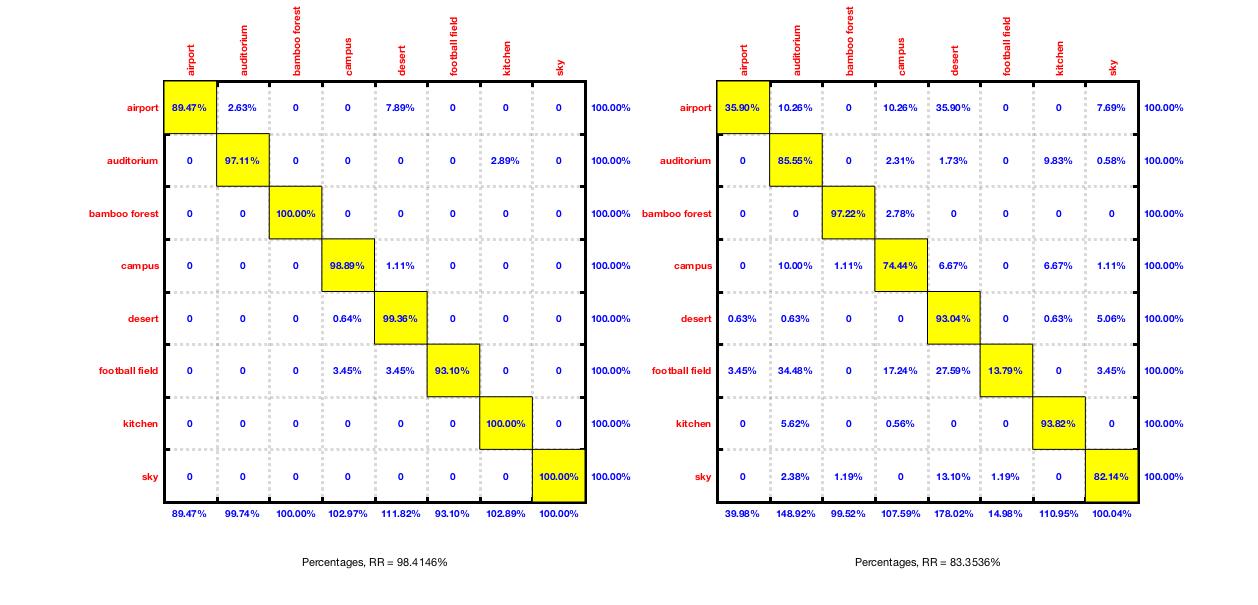

Confusion Matrix Results using Spatial Pyramid: Gaussian kernel

Training and testing confusion matrices for spatial pyramid level 0.

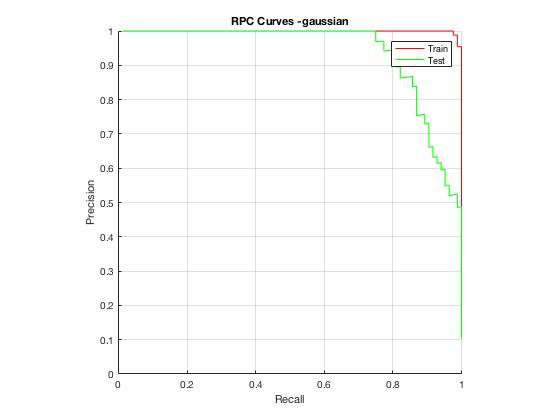

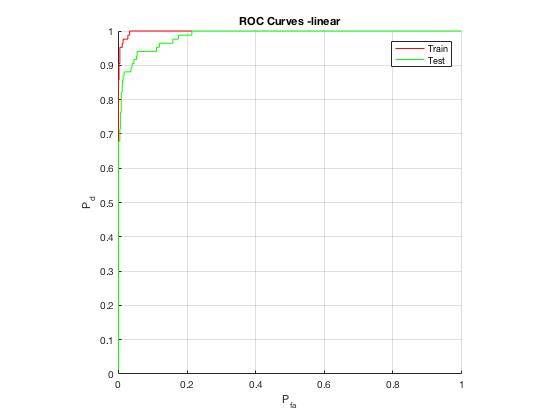

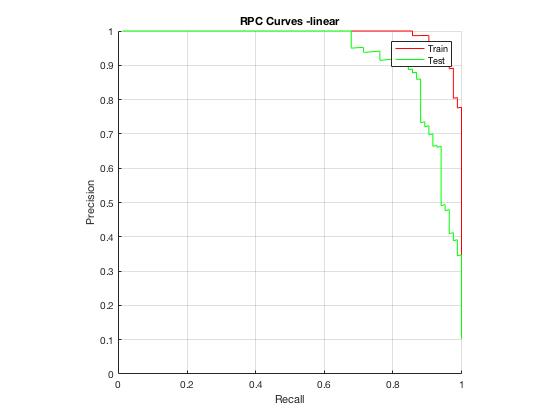

Final ROC and RPC Curves

Curves for the rbf, Gaussian, and linear kernels.
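ROC and precision-recall curves for a one-vs-rest classifier can be traced by sorting one class's SVM confidence scores and sweeping a threshold down through them. A minimal sketch, assuming at least one positive and one negative example; the scores in the usage lines are hypothetical, and the area under the ROC curve is computed with the trapezoid rule.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def roc_and_pr(scores: Sequence[float],
               is_positive: Sequence[bool]) -> Tuple[List[Point], List[Point]]:
    """Sweep a threshold from the highest score to the lowest.

    Returns ROC points (false positive rate, true positive rate) and
    precision-recall points (recall, precision), one per distinct score.
    Assumes at least one positive and one negative example.
    """
    pairs = sorted(zip(scores, is_positive), key=lambda p: p[0], reverse=True)
    n_pos = sum(1 for _, pos in pairs if pos)
    n_neg = len(pairs) - n_pos
    tp = fp = 0
    roc: List[Point] = [(0.0, 0.0)]
    pr: List[Point] = []
    for i, (score, positive) in enumerate(pairs):
        if positive:
            tp += 1
        else:
            fp += 1
        # Examples tied at the same score share one threshold, so wait
        # until the whole tie group has been counted.
        if i + 1 < len(pairs) and pairs[i + 1][0] == score:
            continue
        roc.append((fp / n_neg, tp / n_pos))
        pr.append((tp / n_pos, tp / (tp + fp)))
    return roc, pr


def trapezoid_area(points: List[Point]) -> float:
    """Area under a piecewise-linear curve given as (x, y) points."""
    return sum((x2 - x1) * (y1 + y2) / 2
               for (x1, y1), (x2, y2) in zip(points, points[1:]))


# Hypothetical SVM confidences for one class versus the rest.
scores = [0.9, 0.8, 0.4, 0.3]
labels = [True, False, True, False]
roc, pr = roc_and_pr(scores, labels)
print(roc)                  # [(0.0, 0.0), (0.0, 0.5), (0.5, 0.5), (0.5, 1.0), (1.0, 1.0)]
print(trapezoid_area(roc))  # 0.75
```

With 8 one-vs-rest SVMs, this would be run once per class, using that class's SVM scores on every test image.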

Conclusions

For the spatial pyramid, accuracy increased with the number of levels up to level 5, after which it started to go down. We think this is because a level 5 spatial pyramid splits the image into 256 sections, and the smaller the pieces the image is divided into, the less likely it is that a test image will match closely enough to be classified confidently. Among the kernels, rbf gave much better results than the Gaussian and linear kernels. For the random decision forest method, we were not able to get the classifier running with any reasonable accuracy.
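The explanation above can be illustrated with a toy experiment that is not from the project: a hypothetical 256 x 256 image holds a regular lattice of 1,024 features, and a second copy is shifted by 6 pixels in each direction. To isolate the effect of location, each feature is assumed to be its own visual word, so the fraction of features that stay in the same grid section equals the normalized histogram intersection of the two images. As the grid gets finer, the fraction drops sharply, down to 289/1024 (about 28%) for a 16 x 16 grid of 256 sections.

```python
from typing import Tuple


def cell_of(x: float, y: float, size: int, grid: int) -> Tuple[int, int]:
    """(row, col) of the grid cell containing (x, y) in a size x size image."""
    col = min(int(x * grid / size), grid - 1)  # clamp points past the edge
    row = min(int(y * grid / size), grid - 1)
    return row, col


def location_match(grid: int, shift: int = 6, size: int = 256,
                   step: int = 8) -> float:
    """Fraction of lattice features that stay in the same cell after a shift.

    With one visual word per feature, this equals the histogram intersection
    of the original and shifted images divided by the number of features.
    """
    coords = range(step // 2, size, step)  # 4, 12, ..., 252 by default
    points = [(x, y) for x in coords for y in coords]
    same = sum(cell_of(x, y, size, grid) == cell_of(x + shift, y + shift, size, grid)
               for x, y in points)
    return same / len(points)


for grid in (1, 2, 4, 8, 16):
    print(f"{grid:2d} x {grid:<2d} grid ({grid * grid:3d} sections): "
          f"{location_match(grid):.3f}")
```

A small shift that leaves the whole-image histogram untouched can move many features across section boundaries once the sections are only a few pixels wide.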

References

http://slazebni.cs.illinois.edu/publications/pyramid_chapter.pdf

http://www-cvr.ai.uiuc.edu/ponce_grp/publication/paper/cvpr06b.pdf

http://www.ifp.illinois.edu/~jyang29/ScSPM.htm

http://web.engr.illinois.edu/~slazebni/research/